Sub-millisecond compound aware automatic spelling correction

Photo by Brett Jordan

Recently I was pointed to two interesting posts about spelling correction (and here). They applied a deep learning approach, the philosopher’s stone of modern times. It is really fascinating how universal Deep learning is from AlphaGo winning Go championships, [Watson winning Jeopardy}(https://www.technologyreview.com/s/539226/ibm-pushes-deep-learning-with-a-watson-upgrade/), fighting Fake news and threatening mankind with Singularity.

The question is whether the Deep Learning Multi-tool is going to excel and replace highly specialized algorithms and data structures in every domain, if they both deserve their place or if they shine if their complementary strengths are combined. Meanwhile the initial enthusiasm for Deep Learning in spelling correction has been followed by some disillusion.

The reason why they resorted to deep learning was what they perceived as the “abysmal” performance of the conventional spell-checking (which was estimated at ~0.1 seconds for spelling a short word).

While so far no correction performance and memory consumption for the deep learning approach were disclosed, I knew that spelling correction can be done much faster than the 0.1 seconds.

SymSpell, based on the Symmetric Delete spelling correction algorithm, just took 0.000033 seconds (edit distance 2) and 0.000180 seconds (edit distance 3) on an old MacBook Pro.

But then again, their approach was able to deal with more complex expressions like “Whereis th elove”!

SymSpell always expected a single input term and could not correct spaces inserted into a word or spaces missing between two words.

My curiosity was aroused and I decided to try if an additional algorithmic layer on top of SymSpell could deal with it.

SymSpellCompound

SymSpellCompound supports compound aware automatic spelling correction of multi-word input strings. It is built on top of SymSpell’s 1 million times faster spelling correction algorithm.

1. Compound splitting & decompounding

SymSpell assumed every input string as a single term. SymSpellCompound supports compound splitting / decompounding with three cases:

- mistakenly inserted space within a correct word led to two incorrect terms

- mistakenly omitted space between two correct words led to one incorrect combined term

- multiple input terms with/without spelling errors

Splitting errors, concatenation errors, substitution errors, transposition errors, deletion errors and insertion errors can be mixed within the same word.

2. Automatic spelling correction

- Large document collections make manual correction infeasible and require unsupervised, fully-automatic spelling correction.

- In conventional spelling correction of a single token, the user is presented with spelling correction suggestions. For automatic spelling correction of long multi-word text the algorithm itself has to make an educated choice.

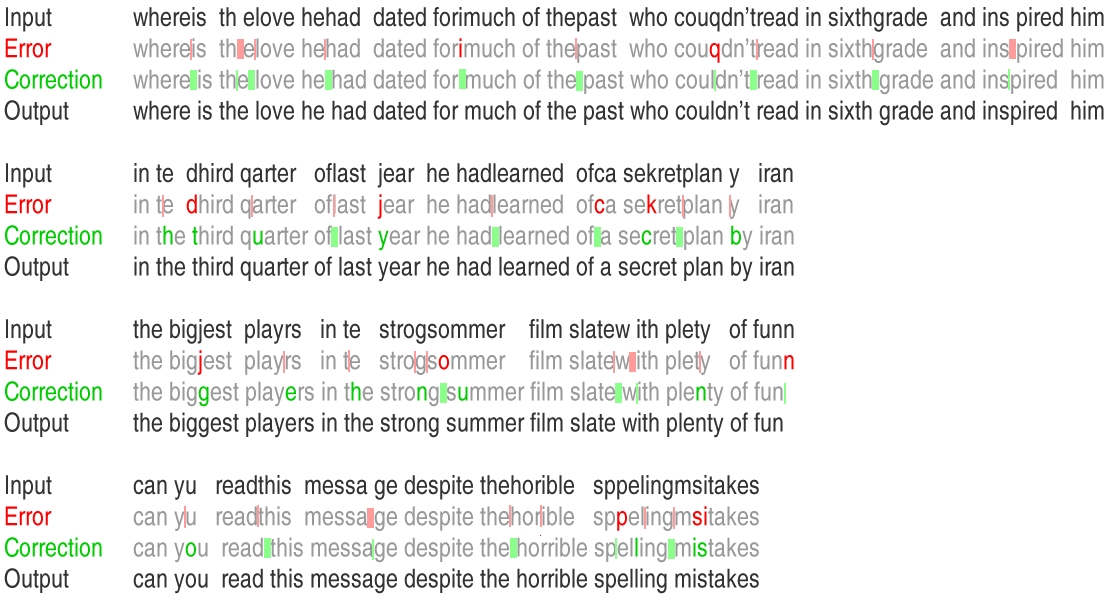

Examples:

How it works

Individual tokens

The input string is split into tokens. Then the Symmetric Delete spelling correction algorithm is used to get suggestions for every token individually.

Combined tokens

Additionally, suggestions for every bigram (concatenated pair of consecutive tokens) are checked, but only if one of two consecutive tokens provides no suggestions or the best suggestion has an edit distance > 1.

The suggestion for the combined token are preferred, if suggestion(token1+token2).editDistance+1 < suggestion(token1).editDistance+suggestion(token2).editDistance

Split tokens

Additionally, all pairs of subterms from a token are generated, but only if that token was not combined, if the token consists of multiple chars and if the best suggestion of the token had an editDistance >0.

Dictionary generation

Dictionary quality is paramount for correction quality. In order to achieve this two data sources were combined by intersection:

Google Books Ngram data which provides representative word frequencies but contains many entries with spelling errors and SCOWL — Spell Checker Oriented Word Lists which ensures genuine English vocabulary but no word frequencies required for ranking of suggestions within the same edit distance.

Performance

0.0002 seconds / word

5000 words / second (single core on 2012 Macbook Pro)

Applications

For a single user or for small edit distances other algorithms might just be fine. But for search engines and search as a service search API where you have to serve thousands of concurrent users, while still maintaining a latency of a few milliseconds, and where spelling correction is not even the main processing task, but only one of many components in query preprocessing, you need the fastest spelling correction you can get.

- Query correction (up to 26% of web queries contain misspelled terms),

- Chatbots (e.g. Symptomate and Florence using SymSpell),

- OCR post-processing,

- Automated proofreading.

Frequency dictionary

The {word frequency list](https://github.com/wolfgarbe/SymSpellCompound/blob/master/wordfrequency_en.txt) was created by intersecting the two lists mentioned below. By reciprocally filtering only those words which appear in both lists are used. Additional filters were applied and the resulting list truncated to ≈ 80,000 most frequent words.

- Google Books Ngram data (License): Provides representative word frequencies

- SCOWL — Spell Checker Oriented Word Lists (License): Ensures genuine English vocabulary

Blog Posts: Algorithm, Benchmarks, Applications

- 1000x Faster Spelling Correction algorithm

- Fast approximate string matching with large edit distances in Big Data

- Very fast Data cleaning of product names, company names & street names

Source code

SymSpellCompound has been integrated into SymSpell with the method lookup_compound:

- The C# implementation of our Symmetric Delete Spelling Correction algorithm is released on GitHub as Open Source under the MIT license.

- The Rust implementation of the Symmetric Delete Spelling Correction algorithm is released on GitHub as Open Source under the MIT license.

Todo

If a spelling error is a valid word at the same time (e.g. message vs. massage) it is currently not corrected.

Word frequencies could be used for ranking, but sometimes the rare word might be the correct one in a given context.

Bigram probabilities could provide context, but often context does not come from consecutive terms but is hidden more distant in the text. Collecting and storing co-occurrence probabilities for all term combinations of the vocabulary within a sliding window might be prohibitive.

We could resort to grammar and sentence elements and SyntaxNet.

We could guess author’s intent/reason for error by exploiting keyboard proximity, phonetic proximity and authors past personal vocabulary preferences.

False positives can occur when correct, but unknown words are within editDistanceMax of other, known words.